CXL 4.0 Released: Bandwidth Doubled

CXL 4.0 doubles the per-lane signaling rate to 128 GT/s, enabling pooled memory and composable AI infrastructure for LLM and HPC workloads.
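The 128 GT/s figure is a per-lane transfer rate, not a byte throughput. The minimal sketch below converts it into raw link bandwidth, assuming one bit per lane per transfer and an x16 link. It deliberately ignores FLIT, CRC, and FEC overhead, so real throughput will be somewhat lower. The function name is hypothetical and chosen only for illustration.

```python
def raw_bandwidth_gbytes_per_s(gt_per_s: float, lanes: int = 16) -> float:
    """Raw per-direction link bandwidth in GB/s (hypothetical helper).

    Assumes one transferred bit per lane per transfer and ignores
    FLIT, CRC and FEC overhead, so delivered throughput is lower.
    """
    # Gb/s summed over all lanes, divided by 8 bits per byte -> GB/s
    return gt_per_s * lanes / 8


# Compare the previous generation (64 GT/s) with CXL 4.0 (128 GT/s) on x16 links.
for gen, rate in [("CXL 3.x (64 GT/s)", 64), ("CXL 4.0 (128 GT/s)", 128)]:
    per_dir = raw_bandwidth_gbytes_per_s(rate)
    print(f"{gen}: x16 = {per_dir:.0f} GB/s per direction, "
          f"{2 * per_dir:.0f} GB/s bidirectional")
```

Under these assumptions an x16 link goes from 128 GB/s to 256 GB/s per direction, which is where the "bandwidth doubled" headline comes from.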


Digital storage and memory technologies are critical enablers of high-performance computing (HPC) and artificial intelligence (AI). At the recent SC25 conference, several notable advances in these technologies were unveiled. This article focuses on the latest generation of Compute Express Link (CXL) and on the NVIDIA-certified solutions released by DDN, both aimed at supporting autonomous AI.

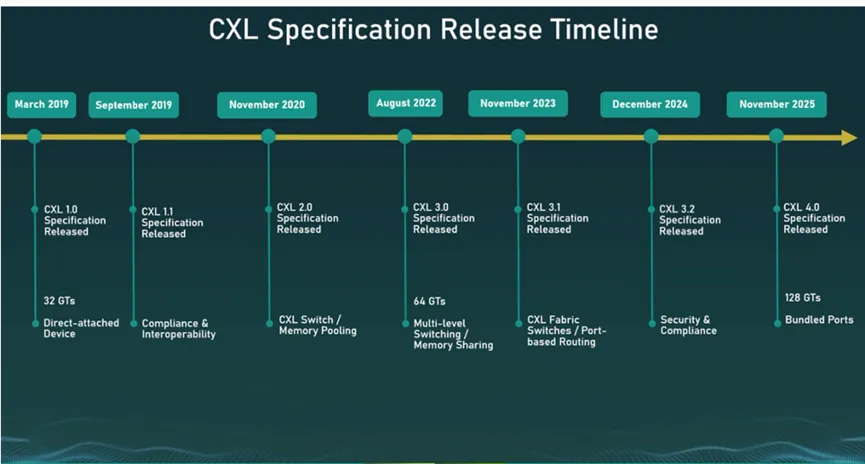

The CXL Consortium maintains the Compute Express Link (CXL) interconnect specification, which enables high-speed, high-capacity connections between CPUs and devices and between CPUs and memory. This lets servers expand memory capacity beyond the limits of their traditional DIMM slots and share memory through pooling. Since its initial release in 2019, the CXL specification has gone through multiple updates, as shown in the timeline below.
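To make the idea of memory pooling concrete, here is a deliberately simplified sketch: a shared pool of capacity that several hosts borrow from and return to, instead of each server being limited to its own DIMM slots. Everything here (the `MemoryPool` class, host names, GiB sizes) is hypothetical. It does not model the fabric management, address mapping, or coherency that the CXL specification actually defines.

```python
from dataclasses import dataclass, field


@dataclass
class MemoryPool:
    """Hypothetical model of a CXL-attached memory pool shared by hosts."""
    capacity_gib: int
    allocations: dict[str, int] = field(default_factory=dict)

    def free_gib(self) -> int:
        # Capacity not currently assigned to any host.
        return self.capacity_gib - sum(self.allocations.values())

    def allocate(self, host: str, size_gib: int) -> bool:
        """Assign size_gib of pooled memory to host if enough is free."""
        if size_gib <= 0 or size_gib > self.free_gib():
            return False
        self.allocations[host] = self.allocations.get(host, 0) + size_gib
        return True

    def release(self, host: str, size_gib: int) -> None:
        """Return size_gib from host back to the shared pool."""
        held = self.allocations.get(host, 0)
        if size_gib > held:
            raise ValueError(f"{host} holds only {held} GiB")
        remaining = held - size_gib
        if remaining:
            self.allocations[host] = remaining
        else:
            self.allocations.pop(host, None)


# Usage: capacity moves between hosts as demand shifts.
pool = MemoryPool(capacity_gib=1024)
pool.allocate("host-a", 768)   # succeeds: 256 GiB left in the pool
pool.allocate("host-b", 512)   # fails: not enough free capacity
pool.release("host-a", 256)    # host-a hands back 256 GiB
pool.allocate("host-b", 512)   # now succeeds: pool is fully assigned
```

The design choice being illustrated is that capacity belongs to the pool rather than to any one server, so it can be reassigned as workloads change.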

At SC25, the CXL Consortium officially released the CXL 4.0 specification. The main features of this new standard are as follows: